Test Pyramid

Why do testing at all?

It goes without saying that modern web development cannot be done without some form of automated testing. Working on a code base without a solid test suite creates problems in the long run: a new feature breaks the app, and fixing that bug creates a slew of others. That’s why you have to test, right? Right?!

How much testing is enough?

One recommended way of doing testing is the TDD methodology (see David’s post on TDD right here). But even when following TDD, it is not always clear what to test. You should always test the happy path (the feature works as intended), the sad path (the feature fails gracefully) and the what-if scenarios (edge cases). However, how do you know when you have tested enough? Even a numeric measure such as test coverage can be misleading when the appropriate tests are not applied in the appropriate places.
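To make the three kinds of scenarios concrete, here is a minimal sketch using plain Minitest. The `parse_price` helper is hypothetical and exists only for illustration; it assumes prices are entered as text and stored as an integer number of cents.

```ruby
require "minitest/autorun"

# Hypothetical helper, not part of the application in this post:
# converts a price entered as text into an integer number of cents.
def parse_price(input)
  text = input.to_s.strip
  raise ArgumentError, "price is required" if text.empty?
  unless text.match?(/\A\d+(\.\d{1,2})?\z/)
    raise ArgumentError, "price must be a non-negative number"
  end

  whole, fraction = text.split(".")
  # Pad the fraction so that "2.5" means 50 cents, not 5.
  whole.to_i * 100 + fraction.to_s.ljust(2, "0").to_i
end

class ParsePriceTest < Minitest::Test
  # Happy path: the feature works as intended.
  def test_parses_a_plain_price
    assert_equal 200, parse_price("2")
  end

  # Sad path: bad input fails gracefully with a clear error.
  def test_rejects_non_numeric_input
    assert_raises(ArgumentError) { parse_price("bacon") }
  end

  # What-if scenarios: edge cases around the boundaries.
  def test_handles_edge_cases
    assert_equal 0, parse_price("0")
    assert_equal 250, parse_price("2.5")
    assert_equal 1999, parse_price(" 19.99 ")
    assert_raises(ArgumentError) { parse_price("") }
    assert_raises(ArgumentError) { parse_price(nil) }
  end
end
```

Every line of `parse_price` would already count as covered by the happy-path and sad-path tests, yet the padding rule for "2.5" is only checked by the edge-case test. That is one way a coverage number can look better than the suite really is.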

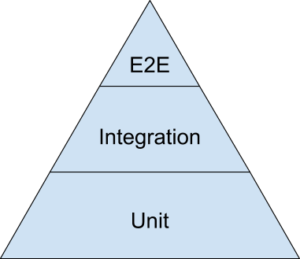

Testing pyramid

Your test suite should be efficient above all, and one way to achieve that efficiency is through the concept of a Test Pyramid. A test pyramid, in a nutshell, means having a few strategic end-to-end tests at the top, some integration tests in the middle, and many more isolated, but focused, unit tests at the base.

End-to-end / Acceptance tests

End-to-end, or acceptance tests, form the top level of your test suite and focus on testing the whole stack of the application. They serve to confirm that the flow of the application is behaving as expected, from start to finish, and that your feature can be completed successfully.

Acceptance tests simulate the way a real user would use your application to achieve their objective (a use case), by clicking links, filling out forms, and navigating different areas of the whole system in succession.

These tests are much slower and longer than unit or controller tests. They can also get messy. If you are not careful, they become brittle over time and need frequent revisions as the underlying parts of the system change.

[code lang="ruby"]
# an example of an acceptance test
require "rails_helper"

class CreateProductTest < Capybara::Rails::TestCase
  test "can create a new product" do
    visit products_path
    click_link 'New Product'

    fill_in "Title", with: "Bacon"
    fill_in "Description", with: "Chunky bacon."
    fill_in "Price", with: "2"
    click_button "Create Product"

    assert page.has_selector?('p', text: 'Bacon')
    assert page.has_selector?('p', text: 'Chunky bacon.')
    assert page.has_selector?('p', text: '2')
  end
end
[/code]

When you encounter a failure in a high level test, even though your lower level tests are green, it can signify that you have some unit tests that are missing or inappropriate. Before fixing the bug, you should first try writing a unit test that replicates the same bug at the lower level.
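As a sketch of that workflow, suppose an acceptance test showed the product page rendering "$2.5" instead of "$2.50". The `format_price` helper and the bug itself are hypothetical, invented here only to show the shape of a regression test written at the unit level before the fix.

```ruby
require "minitest/autorun"

# Hypothetical helper, not part of the original application.
# The earlier, buggy version simply interpolated the number: "$#{amount}".
def format_price(amount)
  format("$%.2f", amount)
end

class FormatPriceTest < Minitest::Test
  # Regression test written first: it reproduces, at the unit level,
  # the failure that was originally seen in the acceptance test.
  def test_always_shows_two_decimal_places
    assert_equal "$2.50", format_price(2.5)
  end

  def test_formats_whole_numbers
    assert_equal "$2.00", format_price(2)
  end
end
```

Once the unit test pins the bug down, the fix can be verified in milliseconds, and the slower acceptance test only has to confirm that the page as a whole is green again.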

Integration tests

These tests check how some parts tie together without necessarily doing a full run-through of the system. One type of integration test in Rails applications is the controller test. A controller test maps to a single controller, and each test covers a single scenario. These tests are typically concerned with the results of a controller action and check the HTTP response code, the redirect location, or the cookies being set.

[code lang="ruby"]
# an example of a controller test
require 'rails_helper'

class ProductsControllerTest < ActionDispatch::IntegrationTest
  # We are not testing validations, here we are concerned
  # with the controller behavior.
  # Also, we are only testing the _sad path_ of POST products,
  # we have the _happy path_ covered with our acceptance test.
  test "POST create" do
    assert_no_difference('Product.count') do
      post products_url, params: { product: { title: nil } }
    end

    assert_equal products_path, path
    assert_template :new
  end
end
[/code]

Unit tests

Unit tests ensure that your classes and components behave as intended, in isolation. These are typically easy to write as they are focused on specifics, and you only need to keep one small part of the system in mind when you work on them. They are also quick to perform, so you can afford to have plenty of them, testing just about every edge case and business logic scenario you can think of.

[code lang="ruby"]
class Product < ApplicationRecord
  validates :title, :description, presence: true, profanity: true
end

class ProfanityValidator < ActiveModel::EachValidator
  PROFANITIES = %w(boogers snot poop shucks argh)

  def validate_each(record, attribute, value)
    profanities = Regexp.union(PROFANITIES)

    if value.present? && value.downcase =~ profanities
      record.errors[attribute] << (options[:message] || "can't contain profanities")
    end
  end
end
[/code]

The tests for ProfanityValidator and Product are below:

[code lang="ruby"]
require "test_helper"

describe ProfanityValidator do
  let(:product) { Product.new }
  let(:profanity_validator) { ProfanityValidator.new(attributes: { description: '' }) }

  test 'record is valid' do
    assert_nil profanity_validator.validate_each(product, :description, 'Bacon')
  end

  test 'record is not valid' do
    assert_equal ["can't contain profanities"],
                 profanity_validator.validate_each(product, :description, 'Boogers')
  end
end

describe Product do
  test 'valid product' do
    product = Product.new(title: 'Bacon', description: 'Chunky bacon!')
    assert product.valid?
  end

  test 'invalid without title' do
    product = Product.new(description: 'Chunky bacon!')
    refute product.valid?
    assert_equal ["can't be blank"], product.errors[:title]
  end

  test 'invalid without description' do
    product = Product.new(title: 'Bacon!')
    refute product.valid?
    assert_equal ["can't be blank"], product.errors[:description]
  end

  test 'invalid with profanities in title' do
    product = Product.new(title: 'boogers', description: 'Chunky bacon!')
    refute product.valid?
    assert_equal ["can't contain profanities"], product.errors[:title]
  end
end
[/code]
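Because unit tests are cheap, this is also the level at which to probe edge cases of the matching rule itself. The sketch below mirrors the regular expression logic of ProfanityValidator in plain Ruby so it can run without Rails. The `contains_profanity?` helper and the `PROFANITY_PATTERN` constant are hypothetical names introduced only for this example, and blank handling is approximated with `strip.empty?` instead of `present?`.

```ruby
require "minitest/autorun"

# Plain-Ruby mirror of the matching rule used by ProfanityValidator.
# `contains_profanity?` is a hypothetical helper for this sketch only.
PROFANITIES = %w(boogers snot poop shucks argh).freeze
PROFANITY_PATTERN = Regexp.union(PROFANITIES)

def contains_profanity?(value)
  text = value.to_s
  # Blank values are left to the presence validation.
  return false if text.strip.empty?

  text.downcase.match?(PROFANITY_PATTERN)
end

class ContainsProfanityTest < Minitest::Test
  def test_clean_text_passes
    refute contains_profanity?("Chunky bacon!")
  end

  def test_match_is_case_insensitive
    assert contains_profanity?("BOOGERS")
  end

  def test_blank_values_are_ignored
    refute contains_profanity?(nil)
    refute contains_profanity?("   ")
  end

  # Regexp.union matches substrings, so a word that merely contains a
  # listed term is flagged as well.
  def test_substrings_are_flagged
    assert contains_profanity?("snotty")
  end
end
```

The last test documents a behavior rather than judging it: whether "snotty" should be rejected is a product decision, but a unit test is a cheap place to make that decision explicit.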

One danger with unit tests is that they can create a false sense of security. Because they run in isolation, unit tests alone often fail to reveal higher level bugs that only appear when different parts of the system have to rely on one another.
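A minimal sketch of how this can happen: the two classes below are hypothetical and exist only for this example. Each one passes its own unit test, yet they disagree about whether a price is expressed in cents or in dollars.

```ruby
require "minitest/autorun"

# Both classes are hypothetical and exist only for this sketch.
# PriceParser returns an amount in cents...
class PriceParser
  def self.parse(text)
    (Float(text) * 100).round
  end
end

# ...while PriceLabel was written assuming it receives dollars.
class PriceLabel
  def self.render(dollars)
    format("$%.2f", dollars)
  end
end

class IsolatedUnitTests < Minitest::Test
  def test_parser_returns_cents
    assert_equal 250, PriceParser.parse("2.50")
  end

  def test_label_formats_dollars
    assert_equal "$2.50", PriceLabel.render(2.5)
  end
end

class CombinedBehaviorTest < Minitest::Test
  # Wiring the two units together exposes the mismatch: the user typed
  # "2.50" but would see a very different price on the page.
  def test_units_disagree_when_combined
    label = PriceLabel.render(PriceParser.parse("2.50"))
    refute_equal "$2.50", label
  end
end
```

Both isolated tests are green, so the unit level reports no problem. Only a test that exercises the parser and the label together, such as an integration or acceptance test expecting "$2.50", would catch it.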

All-seeing eye

Even a well-organized suite of automated tests may not be enough, and you’ll sometimes need a last-resort safety net. This is where the all-seeing eye of a human comes into play and takes a good high level look, using manual exploratory tests to verify that the application as a whole successfully fulfills the overall objective of the user.

And last but not least, we need to ensure that the visual design of the app is up to snuff, which is hardly possible to ascertain with an automated test.

Conclusion

Designing your test suite according to the concept of a test pyramid means that you’ll have many more low level tests than higher level integration and acceptance tests. The base of the pyramid consists of your unit tests covering your models, views, and whatever else you want to test in isolation. The higher levels of the pyramid, on the other hand, verify that those isolated pieces work well with each other. Normally, you won’t need that many of the slower, higher level tests.

When you follow BDD, you typically write an end-to-end test describing a successful run-through of the features you are supposed to implement. Of course, it will initially fail. You then break the feature down into separate parts and write your controller and unit tests. According to the TDD principles, you only implement just enough to make your tests pass. In the end, all your tests, including the first feature spec, should be green, which will signify that everything falls into place nicely and the user requirements are being met.
